047 – Using Timecode With Live Music

Normally we don't think about timecode in live sound — but it can be an effective tool.

Written by Scott Adamson

When timecode is implemented properly, it’s really effective at keeping production elements locked in time with the music. Many large-scale productions rely heavily on it, but it’s mostly used by lighting and video technicians.

Even though it’s not very common in live sound, it’s also possible to have timecode trigger events on our audio consoles. Usually this would be used to recall settings stored in a scene/snapshot. On pro consoles, this can be configured to do almost anything: change gains, move faders, unmute channels, change EQ, insert a plug-in, or adjust whatever parameters that console allows.

In addition to this post, I shot a quick video about how timecode can be implemented in live music:

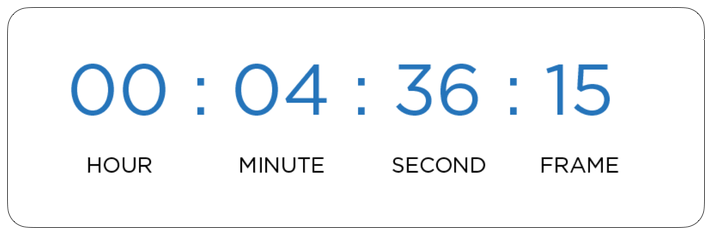

So, what is timecode? Standard SMPTE timecode is a string of four numbers that identifies a specific frame of video or audio. It’s displayed as 00:00:00:00 (hours:minutes:seconds:frames). As time progresses, the numbers advance one frame at a time, like a clock.
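To make the hours:minutes:seconds:frames idea concrete, here’s a minimal Python sketch that converts between an absolute frame count and a timecode string. It assumes a whole-number, non-drop-frame rate (30 fps by default; 24 and 25 fps work the same way). Drop-frame timecode at 29.97 fps uses different counting rules and isn’t handled here. The function names are just for illustration.

```python
def frames_to_timecode(total_frames: int, fps: int = 30) -> str:
    """Convert an absolute frame count to non-drop-frame HH:MM:SS:FF."""
    if total_frames < 0:
        raise ValueError("frame count must be non-negative")
    frames = total_frames % fps            # leftover frames within the current second
    total_seconds = total_frames // fps
    seconds = total_seconds % 60
    minutes = (total_seconds // 60) % 60
    hours = (total_seconds // 3600) % 24   # SMPTE timecode rolls over after 23:59:59:FF
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def timecode_to_frames(tc: str, fps: int = 30) -> int:
    """Convert a non-drop-frame HH:MM:SS:FF string back to a frame count."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} is out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


if __name__ == "__main__":
    print(frames_to_timecode(111694))         # 01:02:03:04
    print(timecode_to_frames("01:02:03:04"))  # 111694
```

Because every frame gets its own unique value, a timecode like 01:02:03:04 always points to exactly the same moment in the recording.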

In music (and video/TV) production we use linear timecode (LTC), which is SMPTE timecode that’s transmitted as an audio signal. We can record this signal alongside the music in our playback system so that each song has its own unique timecode. That way, any given timecode value corresponds to one very specific point in a song.

In multitrack playback setups, LTC is recorded on a separate track in the playback software (Pro Tools, Ableton Live, Digital Performer, etc.). That track is then routed to its own output on the audio interface, which feeds the lighting or audio consoles (FYI: it’s standard to just use normal XLR cables for these connections). This lets the playback system trigger actions on the consoles, which keeps everything tightly in sync with the music.


Even though it's not something many audio engineers do, I've implemented timecode in some of my shows with great results. I’ll set the console to recall a snapshot at a specific point in the music, which triggers something like unmuting FX or boosting a fader for a guitar solo. Of course, this only works when I'm developing a show file on a specific console for a band that I'm on tour with, mixing the same show every night.
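Consoles handle timecode-triggered snapshot recall internally, and every manufacturer does it differently. Still, the basic logic can be sketched in a few lines of Python. This is a hypothetical illustration, not any console’s actual API: the `Cue` and `CueList` names, the cue times, and the snapshot names are all made up, and it assumes 30 fps non-drop-frame timecode. Each time a new timecode value arrives, it fires any cues that were passed since the previous reading. If the timecode jumps backward (say the song is restarted), it only fires a cue that lands exactly on the new position.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass

FPS = 30  # assumed non-drop-frame rate


def tc_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert HH:MM:SS:FF to an absolute frame count."""
    h, m, s, f = (int(p) for p in tc.split(":"))
    return ((h * 60 + m) * 60 + s) * fps + f


@dataclass(frozen=True)
class Cue:
    timecode: str   # when to fire (hypothetical cue time)
    snapshot: str   # which snapshot to recall (hypothetical name)


class CueList:
    def __init__(self, cues):
        # Keep cues sorted by position so we can binary-search them.
        self.cues = sorted(cues, key=lambda c: tc_to_frames(c.timecode))
        self.frames = [tc_to_frames(c.timecode) for c in self.cues]
        self.last_frame = None

    def update(self, tc: str) -> list[str]:
        """Return the snapshots whose cue points were reached since the last reading."""
        now = tc_to_frames(tc)
        if self.last_frame is None or now < self.last_frame:
            # First reading or a backward jump: only fire a cue exactly at `now`.
            start = bisect_left(self.frames, now)
        else:
            # Normal playback: fire cues after the last reading, up to and including `now`.
            start = bisect_right(self.frames, self.last_frame)
        end = bisect_right(self.frames, now)
        self.last_frame = now
        return [cue.snapshot for cue in self.cues[start:end]]


if __name__ == "__main__":
    show = CueList([
        Cue("01:00:45:00", "Gtr solo fader up"),
        Cue("01:01:10:00", "Gtr solo fader down"),
        Cue("01:00:00:10", "Unmute vocal FX"),
    ])
    for tc in ["01:00:00:00", "01:00:00:15", "01:00:50:00", "01:01:20:00"]:
        print(tc, show.update(tc))
```

Firing on the range between readings, rather than on an exact match, means a cue still triggers even if an individual timecode frame is missed.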

Usually the first reaction I get from other engineers when I tell them that I do this is: “That's crazy. I wouldn't want my faders moving on their own!” And I totally get that. I mean, really good engineers are comfortable walking up to any console and getting a solid mix going for almost any band. They don’t want anything happening that’s out of their direct control.

But when I have 80+ inputs with a bunch of things changing during each song, taking advantage of the technology and programming the console ahead of time means I don’t have to worry about some of the minor fader moves. That way I can keep my attention on the big picture and concentrate on the overall sound of the show.